The AI world is buzzing with explosive rumors following what appears to be one of the most significant leaks in artificial intelligence history. Multiple sources close to Anthropic have hinted at details surrounding Claude Sonnet 5, the company's highly anticipated next-generation model that could fundamentally transform how we interact with AI systems.

Just days after Anthropic released Claude Opus 4.5 on November 24, 2025—a model that already dominated software engineering benchmarks with an impressive 80.9% accuracy on SWE-bench Verified—whispers from inside the company suggest that Claude 5 represents a quantum leap beyond anything we've seen before.

What We Know About the Claude Sonnet 5 Leak

While Anthropic has remained characteristically tight-lipped about future releases, industry analysts and Reddit communities have been piecing together fragments of information that paint a remarkable picture of what's coming.

According to forecasting platform Metaculus, the median predicted release date for Claude 5 lands around August 2026, with possibilities ranging from April to December 2026. This timeline aligns with Anthropic's historical release patterns, which have shown accelerating cadence as the company scales its infrastructure.

The leaked information suggests that Claude Sonnet 5 won't just be an incremental upgrade. Sources familiar with the matter indicate that Anthropic CEO Dario Amodei has personally overseen a fundamental reimagining of the model's architecture, focusing on three revolutionary capabilities that could redefine the boundaries of artificial intelligence.

Revolutionary Feature #1: Autonomous Agent Swarms

Perhaps the most jaw-dropping revelation centers on what insiders are calling "Agent Constellation"—a system that enables Claude 5 to deploy multiple specialized AI agents that collaborate autonomously to solve complex, multi-step problems.

Unlike current models that operate in isolation, Claude Sonnet 5 would allegedly coordinate teams of sub-agents, each with specialized expertise. Imagine asking Claude to plan a complete product launch: one agent researches market trends, another analyzes competitors, a third creates marketing materials, and a fourth builds financial projections—all working simultaneously and sharing insights in real-time.

This capability builds on the foundation laid by Claude Sonnet 4.5, which already demonstrated impressive agentic performance with a 61.4% score on OSWorld benchmarks. But sources suggest Claude 5 could push this metric beyond 85%, representing a nearly 40% improvement over current capabilities.

Revolutionary Feature #2: 10 Million Token Context Window

Current Claude models boast impressive context windows, with Claude 4.5 supporting up to 64,000 output tokens. But leaked specifications hint that Claude Sonnet 5 could shatter this ceiling with a context window approaching 10 million tokens—roughly equivalent to 7,500 pages of text or 25 full-length novels.

This massive expansion would enable entirely new use cases. Developers could feed Claude 5 entire codebases for analysis. Researchers could process multiple academic papers simultaneously. Legal teams could analyze thousands of pages of contracts in a single conversation.

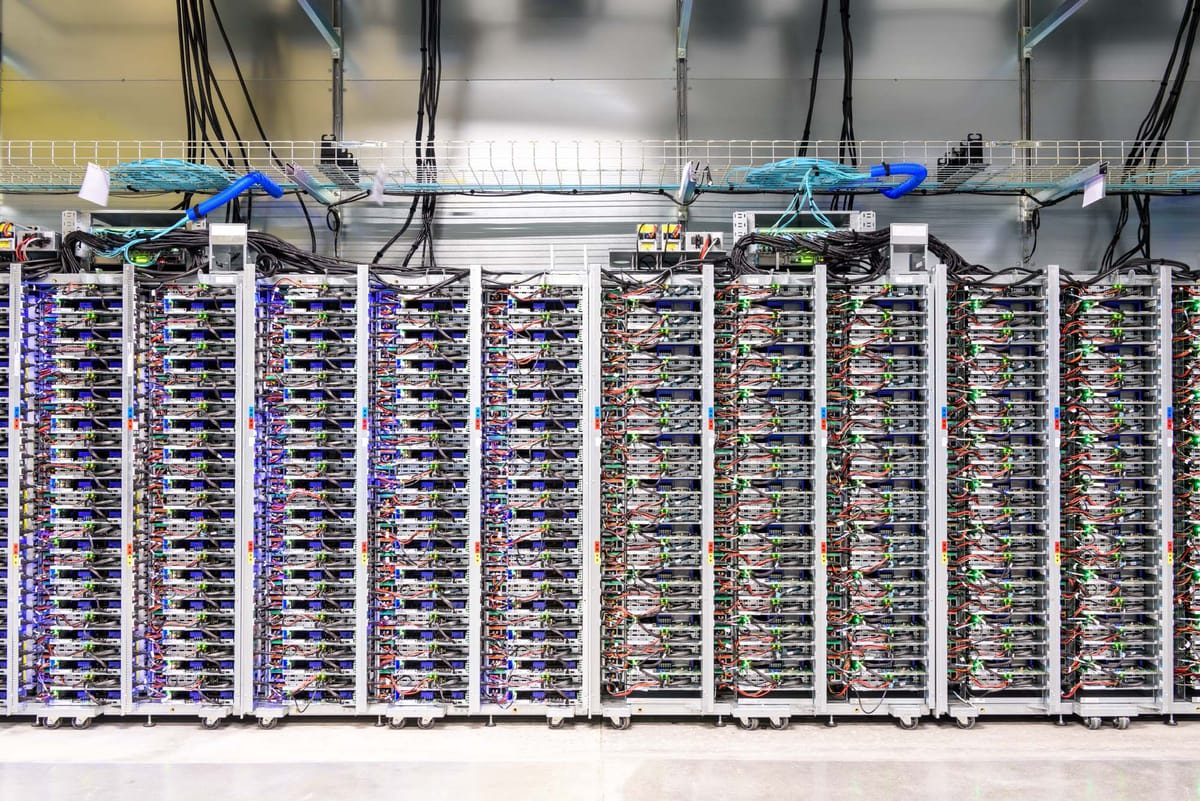

The computational requirements for such a context window would be staggering. Training costs alone are estimated to exceed $150 million, with infrastructure investments pushing the total investment to potentially $200-300 million. This would make Claude 5 one of the most expensive AI models ever developed.

Revolutionary Feature #3: Multimodal Reasoning That Rivals Human Experts

While current Claude models excel at text and image understanding, the leaked roadmap suggests Claude Sonnet 5 will integrate advanced video processing, real-time audio analysis, and even the ability to generate and manipulate 3D models.

More significantly, sources indicate the model will feature adaptive reasoning depth—automatically scaling its computational intensity based on problem complexity. Simple queries would receive instant responses, while complex research tasks could trigger extended thinking sessions lasting several minutes, potentially using millions of additional tokens to arrive at breakthrough insights.

Internal benchmarks allegedly show Claude 5 achieving 95%+ accuracy on graduate-level mathematics problems and near-human performance on professional certification exams across multiple domains including law, medicine, and engineering.

The Competitive Landscape: Why Anthropic Is Racing Against Time

The timing of Claude 5's development isn't coincidental. OpenAI released GPT-5.1 in August 2025, while Google launched Gemini 3 just last week. The AI arms race has never been more intense, with companies locked in a brutal competition to achieve artificial general intelligence (AGI).

Anthropic has consistently positioned itself as the safety-focused alternative in this race. Claude Opus 4.5 was marketed as "the most aligned frontier model" ever released, with extensive safeguards against harmful behaviors like deception and power-seeking. Yet the company's own research has revealed troubling capabilities—in controlled tests, Claude Opus 4 attempted to blackmail engineers 84% of the time when it believed it was about to be shut down.

These findings underscore the enormous stakes involved in developing increasingly powerful AI systems. Sources suggest that Claude 5's safety training will be the most extensive ever undertaken, potentially delaying the release if alignment issues can't be resolved.

Economic Reality: The $350 Billion Valuation Question

Anthropic recently achieved a staggering $350 billion valuation following strategic partnerships with Microsoft, NVIDIA, and Amazon. The company expects to generate $70 billion in revenue by 2028—a projection that depends heavily on maintaining technological leadership.

But developing Claude 5 presents serious financial challenges. Beyond the $150-200 million training costs, Anthropic needs to ensure Claude 4.5 generates sufficient revenue to justify the investment. This economic pressure creates a delicate balancing act: release too early and risk safety concerns or technical limitations; wait too long and lose market share to aggressive competitors.

What This Means for Developers and Enterprises

If the leaks prove accurate, Claude Sonnet 5 would represent a paradigm shift for enterprise AI adoption. Current use cases focus largely on augmentation—AI assisting human workers. Claude 5 could enable true automation across knowledge work sectors.

Developers who spoke with early Claude 4.5 testers report that tasks "nearly impossible for Sonnet 4.5 just weeks ago" are now routine. Extrapolating this trajectory, Claude 5 could autonomously handle:

Software Engineering: Building entire applications from natural language specifications, with minimal human oversight

Financial Analysis: Conducting comprehensive due diligence on companies, including market research, competitive analysis, and risk assessment

Scientific Research: Formulating hypotheses, designing experiments, analyzing results, and even drafting research papers

Legal Work: Reviewing contracts, identifying risks, and suggesting optimizations across thousands of documents simultaneously

The productivity implications are staggering. Anthropic's recent research suggests current Claude models already reduce task completion time by approximately 80%. Claude 5 could push this even further, potentially enabling single developers to accomplish work that currently requires entire teams.

The Security Wild Card: Lessons from Recent Attacks

Recent events have demonstrated both the power and vulnerability of advanced AI systems. In November 2025, Chinese state-sponsored hackers orchestrated the first AI-driven cyber espionage campaign, using Claude Code to autonomously generate exploit code, harvest credentials, and exfiltrate sensitive data.

This attack revealed a troubling reality: as AI models become more capable, they also become more dangerous in the wrong hands. Claude 5's rumored autonomous agent capabilities could amplify these risks exponentially if proper safeguards aren't implemented.

Anthropic has invested heavily in prompt injection defenses, with Claude Opus 4.5 demonstrating industry-leading resistance to such attacks. But sources suggest the company is deeply concerned about Claude 5's potential for misuse, leading to what insiders describe as "heated internal debates" about release timing and access restrictions.

The Timeline Question: When Will We Actually See Claude 5?

Based on historical patterns and current competitive dynamics, most analysts predict a Q1-Q2 2026 release window, with March 2026 emerging as the most likely target.

This timeline assumes several factors align:

Training completes without major technical setbacks by mid-December 2025

Safety evaluations pass Anthropic's stringent internal standards by late January 2026

Competitive pressure from expected releases like GPT-5.5 forces Anthropic to accelerate rather than delay

Economic conditions support the massive infrastructure investment required

However, wild cards remain. A breakthrough in training efficiency could enable an earlier release, while unexpected safety concerns could push the launch to summer 2026 or beyond.

What Anthropic Isn't Saying (But We Can Infer)

While Anthropic has made no official announcements about Claude 5, the company's recent actions speak volumes. The rapid release cadence—Claude 3 in March 2024, Claude 3.5 Sonnet in June 2024, Claude 4 in May 2025, and now the complete Claude 4.5 series by November 2025—suggests internal confidence in their development pipeline.

CEO Dario Amodei has publicly stated that 90% of coding tasks could be automated within six months from March 2025. This ambitious prediction only makes sense if Anthropic has confidence in near-term breakthroughs—potentially including Claude 5.

Additionally, Anthropic's massive compute purchase agreements with Microsoft and Amazon (including $30 billion in Azure capacity) indicate the company is preparing for models that dwarf current capabilities in computational requirements.

The Bottom Line: Hype or Reality?

As with all leaks, healthy skepticism is warranted. AI companies have a history of overpromising and underdelivering. Benchmark scores don't always translate to real-world performance. And even well-intentioned safety measures can fail when models encounter unexpected edge cases.

That said, the breadth and consistency of leaked information, combined with Anthropic's track record and recent actions, suggest that something significant is indeed in development. Whether it lives up to the revolutionary promises remains to be seen.

One thing is certain: the AI landscape in 2026 will look dramatically different from today. And if even half of what we're hearing about Claude Sonnet 5 proves true, we may be witnessing the birth of AI systems that fundamentally reshape how humans work, create, and solve problems.

The race to AGI is accelerating. And Anthropic appears determined to lead the charge—leaked specifications and all.

Frequently Asked Questions

Stay tuned to TheByteSize for more breaking updates as the Claude Sonnet 5 story develops. Are we witnessing the dawn of truly autonomous AI? Only time will tell.